A single flawed AI response can cascade across thousands of customer interactions within seconds. What looks like a small dip in accuracy or a minor misclassification can quickly lead to frustration, broken trust, and revenue loss.

As organizations increasingly rely on AI across chat, voice, and real-time agent assistance, the stakes have never been higher.

Unlike traditional software, AI brings a set of challenges that are harder to predict. Models may return inaccurate answers, misroute customer intent, or misread tone and sentiment. Performance can also decline gradually as data patterns shift, even when the underlying code remains unchanged.

A recent MIT study highlights the scale of the problem: only 5% of enterprise-grade generative AI system pilots move from evaluation into production. This high failure rate shows why observability is essential for AI-driven CX.

By using observability, teams can closely monitor performance, identify irregularities early, and understand where problems originate. The same visibility also creates the trust that both customers and regulators expect.

In this blog, we’ll explore how to apply observability by tracking key metrics, setting meaningful alerts, and using insights to improve AI interactions.

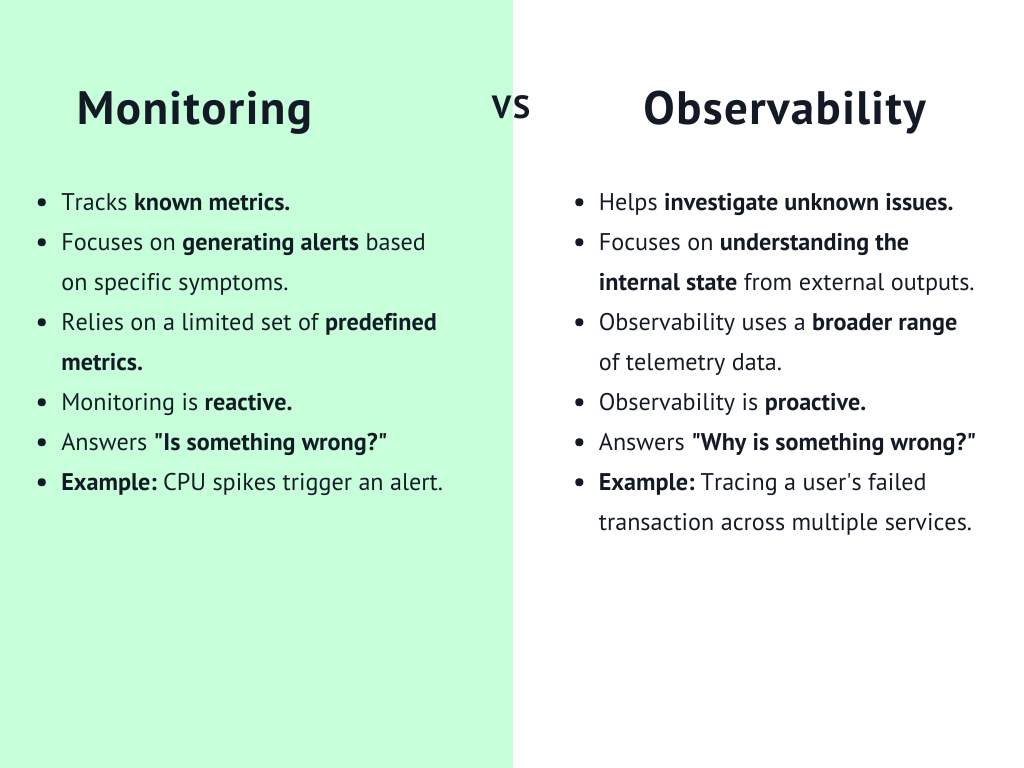

From Monitoring to Observability: What’s the Difference?

- Monitoring tracks metrics such as latency, uptime, and error rates. It gives visibility into whether systems are performing as expected but does not explain the reasons behind issues.

- Observability goes further by using logs, traces, and telemetry to explain system behavior. It connects data points with context, showing how components interact and why problems arise. This deeper view allows teams to diagnose and resolve issues more effectively.

In customer experience, the difference is significant. Monitoring can show that response times are slowing. Observability explains why, whether it’s a misread intent, model drift, or a recent update. With that insight, leaders can ask why the AI produced a certain reply or why sentiment dropped, then address the root cause.

The CX-Specific Challenges of AI Observability

AI-driven customer experience introduces challenges that go beyond traditional monitoring. Four areas are especially important for leaders to address:

1. Limited Visibility in Black-Box Models

Large language models act like black boxes, giving scarce details on how responses come to be formed. It is difficult to pinpoint the exact input or process that caused the error when an error occurs. That gap in clarity slows fixes and raises governance risks.

2. Complexity of Dynamic Interactions

Customer interactions carry their own context: past history, tone of voice, channel used, and more.

The range of scenarios makes it challenging to pinpoint performance issues to a single source. Standard measures like latency or uptime may suggest everything is normal, while customers face problems that go unnoticed.

3. Impact of Drift and Bias on Performance

Models built on historical data often weaken as customer behavior, language, and business needs evolve. Accuracy drops with data or concept drift, and bias in training or usage can lead to uneven or unfair results.

4. Risk of False Confidence in AI Responses

AI systems often generate answers that appear fluent and confident but are factually incorrect. These errors are harder to detect than obvious failures and can quietly erode customer trust.

Strong observability practices are necessary to identify and address them before they impact a large number of interactions.

Key Components of Observability in AI-Driven CX

An AI-powered customer experience relies on several parts working together for observability. Combined, they give teams the visibility to see not just what is happening but also why.

1. Metrics

Metrics track performance at a high level. Key CX metrics include latency, errors, deflection, and escalations.

According to a 2024 survey, 71% of CX teams already monitor resolution time as a core metric, while others track first response time (59%), CSAT (53%), and revenue impact (41%). Tracking them consistently builds a baseline, helping teams catch shifts early.

2. Logs

Logs record detailed events and context. For AI systems, these include customer inputs, the reasoning or decision steps taken by the model, and the triggers that led to escalation. Teams rely on logs for investigating specific incidents and uncovering root causes that metrics alone cannot explain.

3. Traces

Traces capture the full customer journey, starting with the AI’s first reply and ending with resolution. They reveal how requests move through the system, make dependencies clear, and point out bottlenecks or failures across connected parts.

4. Feedback Loops

Feedback loops bring human evaluation back into the system. They capture customer sentiment scores, annotations from quality assurance teams, and model confidence levels.

In practice, CX Network reported that nearly 60% of CX teams rely on NPS, 56% on CSAT, and 49% on customer retention. This feedback validates whether AI responses meet expectations and helps identify areas where retraining or fine-tuning is required.

Setting Up Alerts That Actually Matter

Alerts should call out issues that affect customers directly, not drown teams in noise. In AI-driven CX, the priority is on thresholds that indicate when experience quality changes and demand prompt action.

1. Prevent Alert Fatigue by Focusing on Customer Impact

A high volume of alerts can dilute attention and slow response. Teams should give priority to alerts connected to metrics that shape the customer journey. This could mean tracking sharp increases in agent escalations, unexpected drops in deflection rates, or clear declines in Net Promoter Score (NPS).

2. Use Clear Trigger Conditions

Alerts are most useful when based on conditions that show meaningful shifts in performance. Some examples include:

- Escalation requests rising well above normal levels

- Deflection rates decreasing sharply, suggesting that customers are not getting answers from AI systems

- NPS or sentiment scores falling after a deployment or configuration change

These signals provide early warnings of service degradation that may undermine satisfaction and trust.

3. Define SLOs and SLAs as Alert Boundaries

Service Level Objectives (SLOs) and Service Level Agreements (SLAs) give clear benchmarks.

An SLO might require 95% accuracy in replies or keep escalations under a threshold. Crossing those lines should trigger alerts, giving teams a chance to act before customers are affected.

4. Apply Anomaly Detection to Strengthen Alerts

Anomaly detection identifies behavior that deviates from normal ranges. It compares what is happening now with historical data. Alerts built on these insights bring real problems to the surface sooner while filtering out minor changes.

Optimizing Interactions with Continuous Insights

A study published on ResearchGate found that start-ups in Indonesia adopting AI for customer service achieved a 20% reduction in operational costs while also improving response speed and accuracy.

Observability is most valuable in this regard. Real-time feedback enables teams to adjust AI responses, reduce inefficiencies, and enhance customer satisfaction as interactions occur.

1. Refine Prompts and Responses

Observability data highlights patterns where prompts or responses fall short. If customer inputs consistently lead to irrelevant or incomplete answers, logs and traces help uncover the weaknesses.

A study on arXiv reported that introducing a generative AI assistant improved worker productivity by 15% on average in customer support tasks. This highlights how refined responses, informed by observability insights, can drive efficiency.

2. Improve Routing Rules

Customer journeys often involve multiple handoffs across AI modules or between AI and human agents. Traces make it possible to see where routing rules break down. When analysis shows frequent misrouting or unnecessary escalations, routing logic can be adjusted to direct interactions more efficiently.

3. Identify Training Gaps

Metrics and logs often reveal recurring points of failure, indicating gaps in the training data. Issues such as rare intent types, linguistic variations, or underrepresented customer segments can reduce accuracy. Continuous observability helps flag these weaknesses, enabling teams to expand datasets and improve model coverage.

4. Guide Agent Coaching with Post-Interaction Analytics

By combining sentiment analysis, QA annotations, and model confidence scores, managers can review specific cases to understand what went well and what did not. These findings support targeted coaching for human agents and highlight opportunities to fine-tune AI responses.

The Future: Observability as a CX Differentiator

Observability plays a central role in customer experience as AI takes over more frontline roles.

Gartner reports that 85% of customer service leaders plan to explore or pilot a customer-facing generative AI solution in 2025, underlining how AI is quickly moving from concept to everyday use. Companies that see it only as a compliance task put themselves at risk.

1. Observability as a Strategic Imperative

AI now drives a significant portion of customer interactions. The effectiveness of agentic AI systems depends heavily on observability to ensure trust, reliability, and alignment with business goals. Without it, companies face blind spots that weaken the overall experience and reduce resilience.

According to a 2023 report by the Enterprise Strategy Group, 78% of organizations already have some observability practices in place, showing that most businesses recognize its strategic importance.

2. Building Trust, Loyalty, and Efficiency through Observability

Customers are more likely to trust interactions when systems are transparent and dependable.

Observability helps with this by providing teams with the ability to trace problems, explain results, and take swift action. By identifying irregularities early, lowering escalations, and maintaining consistent workflows, it increases internal efficiency.

3. The Rise of Explainability and Observability Convergence

The industry is seeing observability and explainability converge. Observability highlights system behavior, and explainability provides the logic behind outcomes. When combined, they give organizations a complete view of events and their causes.

The need for this balance will grow as AI manages more frontline interactions. Gartner projects that by 2029, agentic AI will autonomously resolve 80% of routine customer service issues.

To prepare, enterprises will need clear audit trails. They must also confirm that results align with intent and keep full traceability across models and datasets.

How Kapture Helps Enterprises with AI Observability

Kapture CX incorporates observability across its AI Agent Suite, spanning self-service, agent assist, and post-interaction intelligence. This allows enterprises to deploy AI with built-in capabilities to monitor, measure, and improve performance with clarity and trust.

Enterprises using Kapture CX can:

- Use dashboards to watch AI agents live, tracking interactions, intent accuracy, sentiment changes, and conversation health

- Create alerts for failed intents, stuck flows, or higher-than-normal escalation rates. This helps teams act before customer impact grows

- Use feedback-driven insights such as sentiment analysis, QA annotations, and analytics. These highlight where prompts, routing, or training data need improvement

The outcome is a more autonomous customer experience where operations run efficiently, customers feel supported, and transparency strengthens trust.

Request a personalized demo to see how Kapture CX brings observability to life in AI-driven customer experience!

FAQs

Monitoring tells whether systems are in place by tracking key metrics, such as latency. Observability goes further by using logs, metrics, and traces to detect the causes of issues and their effects on customer interactions.

AI systems can make mistakes, from misclassification to drift or bias. Observability brings these issues to light in real time, giving teams the chance to act quickly and protect both trust and results.

Important measures include latency, error rates, intent deflection, and escalation levels. When combined with sentiment analysis and quality checks, these indicators highlight deviations from expected performance.

Early anomaly identification, better routing, targeted agent coaching, and AI prompt enhancement are all made possible by observability. By ensuring accurate and dependable interactions, these procedures foster the development of enduring trust and enhance client loyalty.